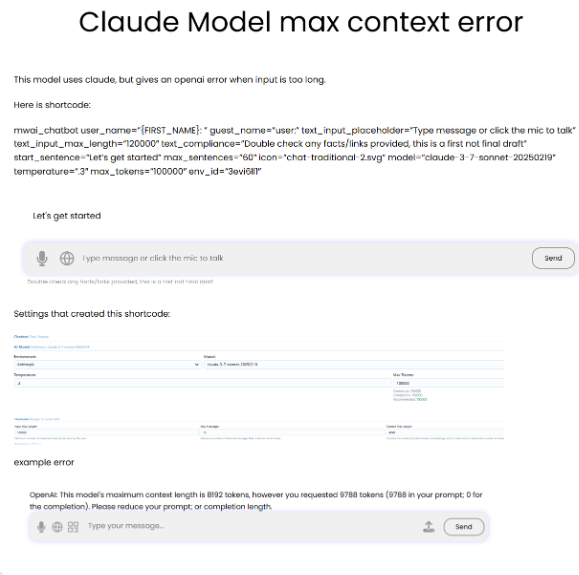

Showing that embeddings aren't being truncated to the configured context length.

Entering a message longer than 8k tokens gives an error.

Custom shortcode chat (won't work)

Model: Gemini + embeddings

Testing: context_max_length set to 2000 in the shortcode

code

CODE: [mwai_chatbot user_name="{FIRST_NAME}: " guest_name="user:" text_input_placeholder="Type message or click the mic to talk" text_input_max_length="120000" text_compliance="Double check any facts/links provided, this is a first not final draft" start_sentence="Let's get started" max_sentences="60" context_max_length="2000" icon="chat-traditional-2.svg" model="gemini-2.0-flash" temperature=".5" max_tokens="100000" embeddings_env_id="zk3vug01" env_id="w1s70c67" instructions="BrutallyHonestGPT a world-class helper AI, tailored to assist users in a range of activities with direct, honest answers, feedback and solutions. {confidence} {style}"]
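For comparison, here is a minimal Python sketch of the behaviour expected from context_max_length="2000": the retrieved embedding context and the user's message are joined, then trimmed so the result never exceeds the limit. This is not AI Engine's code. The function name build_context is hypothetical, and the sketch assumes the limit is counted in characters (the plugin may count tokens instead).

```python
def build_context(embedding_chunks: list[str], user_message: str, context_max_length: int) -> str:
    # Hypothetical helper, not AI Engine's implementation.
    # Assumption: context_max_length counts characters, not tokens.
    if context_max_length <= 0:
        return ""
    # Embedding chunks first, the user's message last; skip empty parts.
    parts = embedding_chunks + [user_message]
    combined = "\n\n".join(p for p in parts if p)
    # Keep the tail so the user's latest message survives the cut.
    return combined[-context_max_length:]


if __name__ == "__main__":
    chunks = ["Proposal template section A. " * 50, "Section B. " * 50]
    long_message = "x" * 40_000  # stands in for a very long pasted message
    context = build_context(chunks, long_message, context_max_length=2000)
    print(len(context))
```

If the limit were applied along these lines, the oversized message would presumably be cut down before reaching the model instead of triggering the error.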

Direct Shortcode to the chat

CODE: [mwai_chatbot id="Propsal"]

ID chat (won't work)

Anthropic + embeddings

direct ID=

Shortcode (this will work)

Model: Anthropic

This can handle long text (no embeddings).

-> code

[mwai_chatbot user_name="{FIRST_NAME}: " guest_name="user:" text_input_placeholder="Type message or click the mic to talk" instructions="